Virtual Desktop Infrastructure (VDI) is very complex. Many companies set out to build a Windows-based VDI or DaaS (Desktop-as-a-Service in the cloud) offering for their users, but poor planning and execution lead to brick walls, and projects stall or fail outright: scrapped completely in favor of something else after much time and money spent. VDI can answer pretty much any business use case but requires the foundation to be built correctly. Once it is, VDI can be very flexible and rewarding for your company. This is a “cheat sheet” of some of my tips for building FOUNDATIONAL VDI with a focus on user experience and security. This knowledge comes from years of field experience building many generations/evolutions of Microsoft, Citrix, and VMware VDI-centric solution stacks, as well as tons of great info from my peers in the community.

BEFORE you begin your VDI journey…

Business justification

Always have a clear-cut use case for what you’re building. If you just build something and then hunt for use cases, the solution usually won’t fit the use case you find without a lot of redevelopment. That means angry users as you scramble to “fix” VDI to make it work. Don’t be a reactive VDI implementer. Be proactive and build the foundation for VDI correctly up front so you can answer any use case that comes up in the future.

Budget

VDI is extremely expensive when you build it right. If you do it right you will require several point solutions to make it function well for your users. Any vendor that comes to you and says they can do it all is selling you a dream. There’s no way around needing some extra pieces in place if you want it to function correctly and have happy users. If you don’t budget for these solutions up front, you will be in for a rude awakening when you see the total cost of ownership (TCO) as your project progresses. If you are doing VDI as purely a cost-cutting measure, you have no concept of what VDI entails and have already started out the project on the wrong foot. Understand the total cost of ownership as well as per user cost to run your service before you begin. Ensure you have a realistic budget.
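To make the per-user math concrete, here is a minimal Python sketch. Every line item and dollar figure below is a made-up placeholder, not a real quote, and the function name is my own. The point is only that the point solutions belong in the total before you divide by your user count.

```python
def per_user_monthly_cost(annual_costs, users):
    """Return (annual TCO, cost per user per month) for a set of yearly line items."""
    if users <= 0:
        raise ValueError("users must be positive")
    annual_tco = sum(annual_costs.values())
    return annual_tco, annual_tco / users / 12


# Hypothetical yearly line items in dollars; replace with your own quotes.
example_costs = {
    "compute_and_storage": 240_000,
    "broker_licensing": 90_000,
    "windows_and_office_licensing": 120_000,
    "monitoring": 30_000,
    "profile_and_policy_tools": 36_000,
    "operations_staff": 204_000,
}

tco, monthly = per_user_monthly_cost(example_costs, users=1_000)
print(f"Annual TCO: ${tco:,.0f}, per user per month: ${monthly:,.2f}")
```

If the per-user number surprises you, it is better to find that out in a spreadsheet than halfway through the project.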

Know your limits

You have existing point solutions in your desktop and server environment, and common sense plus simple economics may dictate attempting to use some of these solutions for your VDI or SBC environment. Many times these solutions weren’t built for dynamic provisioning, single image management, non-persistent user sessions, or multi-user shared targets. As a result, your VDI environment suffers until you can pinpoint the cause. Let me save you from some PTSD: vet how well your existing solutions work in these types of environments before you use them. Do your research. If a simple Google search doesn’t turn up whitepapers from these point solution vendors showing it working, be wary. If the sales team of the solution has to “check internally”, more than likely you’re going to get fed a line of BS soon, so begin looking for something else. Talk to people with a lot of EUC (end-user computing) experience in the areas of VDI (virtual desktop infrastructure) and SBC (server-based computing) to get a better understanding of how complex VDI is and what works with it and what doesn’t. There are many websites and YouTube videos put out by passionate people in the community trying to do good in the world that can give you a better understanding without all the sales noise: what really works and what doesn’t, what you need vs. what is a waste of time and money, etc.

Set expectations

A virtual desktop will never be an identical experience to a physical desktop. You will need to make concessions on performance and what apps can be delivered into or via VDI and SBC. Many people don’t realize this until well into a project. At the end of the day, the solution is being streamed to you from a datacenter or cloud and is not something you can take offline with you like a laptop. It’s running elsewhere and with many layers. You can make it seem pretty close to a physical machine but it will never truly be an identical experience. When users get a taste of something bad, it’s very hard to go back and win them over later. Measure and define every expectation from how long it takes to log in to what kinds of apps can be run in the virtual desktop. Set these expectations up front with the users you are building the VDI solution for.

Be prepared to persevere

There is no magic bullet for VDI. I wish I could tell you everything will be perfect but it won’t be. VDI is complex and has dependencies in your environment you don’t even know about until you begin your journey. Every environment is unique. The bigger your IT environment is with the more cooks in the kitchen engineering and changing things around you to meet their own goals for the year, the more you should be prepared to persevere and react in an agile way to these challenges. Sometimes these don’t always align with your VDI goals. Be prepared to work together with people in your company you may not have worked with before. Everyone in IT is on the same journey, trying to make the environment better for your company. Sit down and spend time to understand everyone’s needs and work together toward that goal.

Identifying and building Foundational VDI by targeting the dependency layers…

IAM – Identity and Access Management

Identity and Access Management (IAM) is the key to enterprise mobility and can make or break your VDI or SBC environment. You can build the most expensive house in your neighborhood but if the front door is locked up tight and your family can’t get in easily to actually live there, then you have wasted your time and money building such an expensive house.

- Authentication (aka AuthN) – is usually the core part of your access management strategy and is where user ID/password, certificate-based authentication, security keys, biometrics, password-less auth using mobile authenticators, etc. come into play. The user will use any of these authentication methods to authenticate and receive an access token from the identity provider (IdP), such as Microsoft Azure AD for example.

- Authorization (aka AuthZ) – is part of the authentication flow and is initiated after using one of the authentication methods above. Authorization is what answers the “okay I’m in, now what?” question using an “if this, then do that” approach. If using Azure AD for example, the user has an access token which will trigger Conditional Access policies which get applied at this point. This is where Zero Trust methodology can come into play as well. You inherently don’t trust anyone whether on a managed or unmanaged device, internal or external in this model. Instead of just user identity, device, or network segment, you look at these plus multiple other characteristics about the user session and choose what they get to see or do as well as continuously evaluate these characteristics so the moment something changes, what they get to see or do changes as well. Many in the security industry say identity is the new perimeter since it enables all this capability.
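The “if this, then do that” idea and the continuous evaluation part can be sketched in a few lines of Python. This is only an illustration of the concept, not how Azure AD Conditional Access or any other IdP is implemented; the signal names, the three outcomes, and the rules themselves are all assumptions I made up for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SessionContext:
    """Hypothetical signals collected about a session (not a real IdP schema)."""
    user_group: str
    device_managed: bool
    network: str  # "internal" or "external"
    risk: str     # "low", "medium", or "high"


def evaluate_access(ctx):
    """Return "block", "restricted", or "full" for the current signals."""
    if ctx.risk == "high":
        return "block"
    if not ctx.device_managed or ctx.risk == "medium":
        # e.g. no clipboard or drive mapping inside the virtual desktop
        return "restricted"
    # Note that the network segment alone never grants extra trust here.
    return "full"


session = SessionContext("finance", device_managed=True, network="internal", risk="low")
print(evaluate_access(session))

# Continuous evaluation: the moment a signal changes, so does the decision.
session = replace(session, risk="high")
print(evaluate_access(session))
```

The design choice worth noticing is that the decision is a pure function of the current signals, so it can be re-run whenever anything about the session changes.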

Cloud-based IdPs (identity providers) I like are Azure AD and Okta UD. Adaptive authentication that provides contextual/conditional access is key and both these identity providers can help there. Azure AD + Azure MFA using Microsoft Authenticator is powerful. Okta UD + Okta MFA using Okta Verify is simple to deploy and powerful as well. Both Microsoft and Okta offer password-less sign-in options as well if your company has a password-less directive (which it should!). Don’t forget when using modern authentication like SAML or OpenID Connect (OIDC is the authentication layer on top of OAuth authorization), that they are web-based authentication meaning Windows OS can’t natively consume it on the backend. You will need a middle-man deployed to translate modern auth to something Windows can understand. If you use Citrix Virtual Apps and Desktops, you will need Citrix Federated Authentication Service (FAS) deployed and talking to your Microsoft CA (certificate authority) to complete SSO using short-lived certificates. You can use an on-prem HSM for key protection or even leverage the cloud-based Azure Key Vault. If you use VMware Horizon, you will need True SSO in VMware Identity Manager (VIM).

Biometric access (something you are) in favor of PIN codes (something you know) is becoming more popular to prove your identity as part of 2FA or MFA (2-factor authentication or multi-factor authentication). Ensure the biometric systems you use have secure enclaves so this data or any metadata remains localized and is never transmitted over the network. Some systems store metadata on servers you don’t control and once this information is compromised and reverse engineered, it can lead to severe consequences on a biometric factor (a part of you like your thumb, face, retina), that can’t be changed.

When thinking about password-less access control mechanisms for VDI, many modern access management methods you come across will work just fine. I have used software authenticators like Microsoft Authenticator and Okta Verify in a password-less configuration with VDI gateways successfully (here is an example). FIDO2 compatible hardware security keys like the YubiKey 5 and the upcoming generation of HID Crescendo smart cards (that combine a proximity card for facilities access + traditional PIV + FIDO2) that extend WebAuthn are great options now and work fine with VDI as long as the identity provider can support them. The YubiKey 5 NFC for example now supports NFC and FIDO2 with Apple devices. The Feitian BioPass FIDO2 Security Key or eWBM Goldengate FIDO2 security key actually have a biometric reader built-in so you can use your fingerprint locally to prove your identity instead of just button-pushing which I really like. Feitian is who is behind Google Titan Security Key which uses the older FIDO U2F standard. FIDO U2F should be considered legacy, with FIDO2 being the successor and what Microsoft supports for password-less sign-ins with hardware security keys. Do not confuse a FIDO U2F compatible security key with a FIDO2 compatible security key, only the latter will work for password-less authentication.

The WebAuthn web standard client API by W3C that the FIDO2 project’s CTAP2 (client to authenticator protocol) from the FIDO Alliance depends upon, in general, is still very new so support continues to develop at a rapid pace every quarter. I am seeing more public consumer web companies like Google, Facebook, Dropbox, etc. supporting the direction right now which will eventually trickle into enterprises by way of compatible IdPs. Windows Hello for Business comes up frequently but since VDI is protected by a gateway typically, this will not apply today. I generally tell all my Microsoft Windows-based customers to go to this site on Microsoft’s password-less stance and scroll down to the “products to get started” section. Out of these 4 methods, password-less phone sign-in using Microsoft Authenticator is the one you will want to focus on when talking about VDI and gateways today. As things mature we will see some of these others continue to evolve their user experience and support for VDI. I would advise companies to plan in the long term for several of these modern password-less authentication mechanisms for their organization. Practically, that’s going to be a combination of Windows Hello for Business, Microsoft Authenticator, and FIDO2 compatible keys.

Most organizations are only just starting out on their password-less journeys. It is very important to continue to protect your users’ passwords as you move toward a password-less state. I urge you to take at the very least some basic steps to protect user passwords annually until you achieve your password-less goal. An example would be enabling Azure AD Password Protection to help protect against common password spray attacks. This works with both Azure AD and regular on-prem AD so there is no reason why you shouldn’t enable this.

Resources

What are you building it on? Host servers, hypervisors, and storage….where to begin? Traditionally storage was the big bottleneck for VDI in the early days. Spinning disks with single image management did not work well. You were stuck buying very expensive at the time solid-state drive (SSD) arrays because you didn’t want to waste your precious monolithic SAN storage on end-user related storage. The advent of software-defined storage (SDS) and hyper-converged infrastructure (HCI) solved this problem.

Many hardware vendors began creating white papers and reference architectures of their solution with whatever VDI solution you intended to use (Citrix, VMware, Microsoft, etc). Don’t fall for it. It’s one or two people at a hardware vendor writing a guideline on what you may expect but it’s not a real-world “this is exactly what you are buying and your users will experience” legally binding document. Pointing at that reference architecture diagram when your users have their pitchforks out isn’t going to do you any good. Always validate the hardware yourself. Buy small and build-up. Doing a giant deal for hardware up front for better discounts is a sales tactic, don’t fall for it.

It is still recommended to use SSD for infrastructure VMs. SQL and Controller speed impact login times, mainly as the session is brokered. If using cloud-based brokers running as a PaaS (platform-as-a-service) solution such as Citrix Cloud Virtual Apps and Desktops (VAD), this piece is often out of your hands.

For the actual virtual desktops, for a long time, you could get away with 2 vCPU and 4 GB RAM. These days with Windows, Office, and other apps needing more RAM than prior versions, the 2 vCPU and 8 GB RAM sized VMs is more popular for basic task worker VMs. This should be your baseline virtual desktop specs in most cases. I am doing 4 vCPU and 8 GB RAM as a more “universal” virtual desktop baseline these days at companies. Content-rich/ad-stricken websites and security agents are all contributing to the need for more resources for each virtual desktop to maintain a good user experience.

Each vendor has BIOS suggestions with hypervisors for VDI workloads. Ensure you are following these to tweak power and CPU settings among others. I have an example of some HP and VMware ESXi guidance I have used successfully here but please validate this with your hardware rep for the latest guidance they have for the model you are going to use.

It’s common to build your boxes to around 75%-80% capacity. All it takes is one DR event for your VDI environment to peg out at 100% across all your boxes beyond any peak load you ever anticipated. Use something like ControlUp Insights to monitor this over time. Just because you built VDI for a 75-80% workload in year 1 doesn’t mean it will stay that way. Software changes, people’s workloads change, new use cases are found and on-boarded….all this impacts your hardware decision making. Don’t get comfortable, always analyze and proactively scale and distribute your workloads with the 75-80% figure in mind.
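Here is a rough Python sketch of how the desktop size and the 75-80% ceiling turn into a host count. The host specs, the 4:1 vCPU-to-core overcommit ratio, and the single spare host are assumptions for illustration only. Your real density comes from validating the hardware yourself, not from a formula.

```python
import math


def hosts_needed(desktops, vcpu_per_desktop, ram_gb_per_desktop,
                 host_cores, host_ram_gb,
                 vcpu_per_core=4.0,        # assumed overcommit ratio, validate it
                 target_utilization=0.75,  # the 75-80% ceiling
                 spare_hosts=1):           # assumed headroom for a host failure
    """Return (desktops per host, total hosts) under the utilization ceiling."""
    usable_vcpu = host_cores * vcpu_per_core * target_utilization
    usable_ram_gb = host_ram_gb * target_utilization
    per_host = int(min(usable_vcpu // vcpu_per_desktop,
                       usable_ram_gb // ram_gb_per_desktop))
    if per_host < 1:
        raise ValueError("host is too small for even one desktop at this ceiling")
    return per_host, math.ceil(desktops / per_host) + spare_hosts


# 500 desktops at 4 vCPU / 8 GB on hypothetical 48-core, 768 GB hosts.
per_host, hosts = hosts_needed(500, 4, 8, host_cores=48, host_ram_gb=768)
print(f"{per_host} desktops per host, {hosts} hosts")
```

In this made-up example CPU is the limiting factor, not RAM. Rerun the numbers whenever the desktop spec or user count changes.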

These days many people are using HCI in the datacenter, the most popular one I see being Nutanix followed closely by Cisco HyperFlex. Both these solutions are built with cloud in mind as well. Many people opt for a hybrid approach to VDI these days. Scale in the datacenter, but your secondary location is not a colo or datacenter you own but rather a cloud IaaS (infrastructure-as-a-service) location such as Microsoft Azure, Amazon Web Services (AWS), or Google Cloud Platform (GCP). Sometimes even multiple clouds which is called a “multi-cloud” strategy where you attempt to disassociate the intricacies of each cloud vendor from your core computing needs. Where they can shift seamlessly between your datacenter locations and these cloud locations, thereby making the resource layer a commodity. Drop the workloads wherever it’s cheapest at that moment in time and when the price drops somewhere else, move it there automatically. This was a dream several years ago but quite possible these days.

I do a lot of VDI in Azure simply because many companies want to shift from a CAPEX (capital expenditure) model to OPEX (operating expenditure) model when having to pay for VDI workloads. It’s much easier to justify VDI when there are little to no sunk costs in your own private cloud on-prem and instead you look at it from an annual subscription standpoint in a public cloud. I want to point out this guidance from Citrix that many people in the EUC community had a chance to review:

https://docs.citrix.com/en-us/tech-zone/design/reference-architectures/virtual-apps-and-desktops-azure.html

A lot of what is covered here is very generic so you don’t have to use Citrix Virtual Apps and Desktops (CVAD). These cloud principles for VDI extend to VMware Horizon and Microsoft WVD as well. I foresee a continued enterprise drive toward hybrid cloud deployments where some resources are going to be on-prem, and many resources are going to be in the cloud in an OPEX model for both production and DR (DRaaS or Disaster Recovery-as-a-Service). I even work with some customers that have graduated to a hybrid multi-cloud approach where they don’t care which vendor’s cloud the workload sits in: they build up virtual desktops from a single master image in the cheapest region and then destroy the VMs and build up elsewhere whenever the price goes up or a cloud vendor is suffering a service disruption/outage.
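The “cheapest healthy region wins” placement logic can be sketched like this in Python. The region labels, hourly prices, and the 10% move threshold are all hypothetical. A real implementation would pull prices and health from each provider and would also have to account for image replication, profile location, and latency.

```python
def cheapest_healthy_region(prices, healthy):
    """Return the lowest priced region that is currently marked healthy."""
    candidates = {r: p for r, p in prices.items() if healthy.get(r, False)}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


def next_region(current, prices, healthy, min_saving=0.10):
    """Decide where to build desktops next.

    Always leave an unhealthy region. Otherwise only move when the saving is
    at least min_saving, so small price wobbles don't cause constant rebuilds.
    """
    best = cheapest_healthy_region(prices, healthy)
    if not healthy.get(current, False):
        return best
    saving = (prices[current] - prices[best]) / prices[current]
    return best if saving >= min_saving else current


# Hypothetical per-desktop hourly prices and health flags.
prices = {"cloud-a/east": 0.20, "cloud-b/central": 0.19, "cloud-c/west": 0.15}
healthy = {"cloud-a/east": True, "cloud-b/central": True, "cloud-c/west": False}
print(next_region("cloud-a/east", prices, healthy))
```

The threshold is there because destroying and rebuilding desktops has a cost of its own, so chasing every small price change rarely pays off.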

Virtual desktops in Azure may appear cheaper to run than on-prem since storage can be destroyed along with the VM, rather than the VM only being powered off as with expensive HCI. Though with Nutanix on-prem and its dedupe capability this is often a wash. In most scenarios the only real design question is whether you would like to pay for resources on consumption or upfront. You can often lower your costs up to 80% in Azure by purchasing Azure Reserved VM Instances (RIs). With Azure Reserved Instances, you get a big discount and Microsoft has an easier time planning infrastructure in their data centers so they incentivize you to use this. This is a big savings for VDI where we typically scale wide with a 1-to-1 Windows 10 OS more often than scaling up for density with Server OS or Windows 10 Multi-session OS.
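The consumption vs. upfront question comes down to a break-even point, which a small Python sketch can show. The hourly rate, the 50% discount, and the 730-hour month are illustrative assumptions and not Azure list prices. The sketch also assumes a reserved instance is paid for every hour of the month whether the desktop is running or not, while a pay-as-you-go VM only bills compute while it is running.

```python
HOURS_IN_MONTH = 730  # common approximation of an average month


def monthly_cost_payg(hourly_rate, hours_running):
    """Pay-as-you-go: pay only for the hours the VM is actually running."""
    return hourly_rate * hours_running


def monthly_cost_reserved(hourly_rate, discount):
    """Reserved: discounted rate, but paid for every hour of the month."""
    return hourly_rate * (1 - discount) * HOURS_IN_MONTH


def break_even_hours(discount):
    """Running hours per month above which the reservation is cheaper."""
    return (1 - discount) * HOURS_IN_MONTH


rate = 0.40      # hypothetical $/hour for one virtual desktop
discount = 0.50  # hypothetical reservation discount

print(f"Break-even: {break_even_hours(discount):.0f} hours per month")
print(f"Pay-as-you-go at 220 h: ${monthly_cost_payg(rate, 220):.2f}")
print(f"Reserved:               ${monthly_cost_reserved(rate, discount):.2f}")
```

With these made-up numbers, a desktop that only runs about 10 hours on 22 working days is cheaper on consumption, while an always-on desktop is far cheaper reserved. Run your own numbers with your real discount and power schedule.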

Spend time to understand where your IaaS workloads should go. Use the Azure Speed Test tool to see which Azure IaaS regions are most optimal for your company and where your users sit. Also, keep legal/compliance/governance requirements in mind when choosing an Azure region. This advice extends to any cloud provider you decide to use. If you prefer AWS as your public cloud, then use the AWS Region Speed Test tool to measure and find your optimal regions. The Microsoft WVD team has also released a Windows Virtual Desktop Experience Estimator tool to help understand where best to drop a workload as this is a critical concept for large global VDI deployments.
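If you want to collect your own numbers from where your users actually sit, the idea behind these tools can be approximated in Python. This is a sketch and not how the tools themselves work: it times a TCP handshake as a rough proxy for round-trip latency, and the region labels and readings below are canned, hypothetical values. You would supply a reachable endpoint per region yourself.

```python
import socket
import statistics
import time


def tcp_connect_ms(host, port=443, timeout=3.0):
    """Time one TCP handshake to host:port in milliseconds (rough latency proxy)."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0


def rank_regions(samples_ms):
    """Sort regions by median latency; samples_ms maps region -> list of ms readings."""
    medians = {region: statistics.median(values)
               for region, values in samples_ms.items() if values}
    return sorted(medians.items(), key=lambda item: item[1])


# Canned readings so the example runs offline; in practice fill these lists
# by calling tcp_connect_ms() several times per region from each user site.
canned = {
    "east-us": [38.0, 41.0, 40.0, 95.0],
    "west-europe": [110.0, 108.0, 112.0, 109.0],
    "south-central-us": [29.0, 31.0, 30.0, 33.0],
}
for region, median_ms in rank_regions(canned):
    print(f"{region}: {median_ms:.1f} ms")
```

I use the median rather than the average so a single slow reading, like the 95 ms outlier above, doesn’t skew the ranking.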

DO NOT begin your VDI journey by putting apps into a master image assuming your resources won’t be a bottleneck. Apps and images are NOT where you start. You need to deploy a Windows ISO on your resource layer (hypervisor or cloud) that is NOT domain joined and simply do a performance test to see what you are working with. This is your baseline. Use IOMeter, ProcMon, etc. to take these data points. Then with a domain joined VM, start using ControlUp, LoginVSI, etc. and take those baselines. That difference right there alone is big and will show you many problems in your environment before you even get to thinking about apps. DON’T RUSH past this CRITICAL step!
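Once you have both sets of numbers, comparing them is simple. Here is a minimal Python sketch. The metric names, the values, and the 20% threshold are hypothetical, and you would feed in whatever your own tools export.

```python
def compare_baselines(workgroup, domain_joined, threshold_pct=20.0):
    """Return metrics that moved more than threshold_pct between the two baselines.

    Values are percent change relative to the non-domain-joined baseline.
    """
    flagged = {}
    for metric, base in workgroup.items():
        if metric not in domain_joined or base == 0:
            continue
        change_pct = (domain_joined[metric] - base) / base * 100
        if abs(change_pct) > threshold_pct:
            flagged[metric] = round(change_pct, 1)
    return flagged


# Hypothetical measurements from the same VM before and after domain join.
workgroup_vm = {"boot_seconds": 30, "logon_seconds": 12, "disk_iops": 20000}
domain_vm = {"boot_seconds": 45, "logon_seconds": 30, "disk_iops": 19000}

for metric, pct in compare_baselines(workgroup_vm, domain_vm).items():
    print(f"{metric}: {pct:+.1f}%")
```

Anything this flags is caused by what the domain brings with it (GPO, agents, AD lookups) and not by your apps, which is exactly why you take these baselines first.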

Monitoring & Reporting

Performance should be captured in every moment of your journey. For Citrix VAD environments, Director is free so use it. Perfect for Service Desk personnel as well. For VMware Horizon environments, the Horizon Help Desk Tool in the Horizon Console or vRealize Operations (vROps) can be used in a similar way. You can also use the standalone Horizon Helpdesk Utility which is much faster than the HTML5 version in Horizon Console.

ControlUp is the de facto standard for VDI or SBC management and monitoring in my opinion. I’ve been using it since around 2011 and it’s always one of the first things I implement from day 1 for any VDI project due to the sheer simplicity of the tool. It will help you build and troubleshoot issues quicker during your build as well as operational phases so don’t think of it as something you do after, get your ControlUp agents deployed upfront and start gathering metrics from the beginning.

Any solution you choose should also have long-term reporting statistics available in addition to real-time stats. Real-time stats are for at-a-glance or troubleshooting purposes, reporting stats are for ensuring you stay employed. I have been in many environments where I have asked Citrix admins what apps they are delivering in their environment or how many people are using virtual desktops and they have no way of answering. This is bad. If your boss’s boss comes to you and asks, “What do you do here?” and you say “Citrix” or some other brokering vendor’s name, he will say “I don’t know what that is.” and have no idea of the business value you bring to your company. You and your position are now insignificant to him. If you say “I help deliver Important App 1, Important App 2, Important App 3, and a secure virtual desktop available from anywhere on any device which generates $100 million in revenue for the company”, your boss’s boss will say “WOW! You’re an important person in this company, here’s a raise! Keep it up!”. Always know what business value you provide in your organization. Director, ControlUp Insights, etc. all have this capability for you to report on long-term application and virtual desktop launch and usage statistics.
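If your tool can export launch records, even a few lines of Python will answer the “what apps and how many people” question. This sketch assumes a simple list of (user, app) records, which is a made-up format and not the export schema of Director, ControlUp, or any other product.

```python
from collections import Counter, defaultdict


def usage_report(launches):
    """Summarize (user, app) launch records into launches and unique users per app."""
    launches = list(launches)
    launch_counts = Counter(app for _, app in launches)
    users_by_app = defaultdict(set)
    for user, app in launches:
        users_by_app[app].add(user)
    # most_common() puts the busiest apps first
    return {app: {"launches": count, "unique_users": len(users_by_app[app])}
            for app, count in launch_counts.most_common()}


# Hypothetical launch records.
records = [
    ("ann", "Important App 1"), ("bob", "Important App 1"), ("ann", "Important App 1"),
    ("cat", "Important App 2"),
    ("ann", "Virtual Desktop"), ("bob", "Virtual Desktop"),
    ("cat", "Virtual Desktop"), ("dan", "Virtual Desktop"),
]
for app, stats in usage_report(records).items():
    print(f"{app}: {stats['launches']} launches, {stats['unique_users']} users")
```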

I have used other EUC and SBC focused monitoring solutions with pretty good success, you should consider any of these:

- ControlUp – https://www.controlup.com/

- uberAgent – https://uberagent.com/

- Goliath – https://goliathtechnologies.com/

- Ivanti AppSense Insight – https://www.ivanti.com/products/insight

- Liquidware Stratusphere UX – https://www.liquidware.com/products/stratusphere-ux

- eG Enterprise – https://www.eginnovations.com/vdi-virtual-desktop-monitoring

Operating System & Office Version

Should you do published desktops from a Server OS for better density or true 1-to-1 virtual desktops using a Desktop OS? These days I steer people toward the Desktop OS more so than before. Microsoft is moving away from a GUI for Server OSes. Having end users on a Server OS that’s trying to mimic a Windows 10 OS means a lot of extra development for Server OS engineers at Microsoft in my opinion. It doesn’t make sense to have Desktop code bits loading down your Server OS codebase, just leads development to be slower trying to do a combined platform. With the advent of Windows 10 Multi-Session, we can clearly see Microsoft wants you to use a Desktop OS for end-user logins and UI interaction rather than a Server OS if you are after density and scalability. I think the days of end-user computing on server-based OSes will be on a decline over the next several years.

The version of Office you use with Windows also makes a difference. There are some performance differences between Office 2016 Professional Plus, Office 2019 Professional Plus, and Office 365 ProPlus. Even running Azure Information Protection (AIP) for your Office docs has certain nuances. Microsoft has also changed their support stance on all 3 flavors of Office running on Server OSes starting with Server 2019 so this is yet another reason why you need to be thinking about moving to a Desktop OS (Windows 10) for your VDI platform.

Almost all my customers have VDI on Windows 10 and published apps on Server 2016. The latter is now legacy in my opinion. Yup, I just called your baby ugly. Start thinking about how your app publishing environment will look when moving to a Desktop OS. Start cataloging your apps and contacting vendors now to understand where they are headed. In some cases, if a vendor isn’t going to support the Microsoft direction on this, then think about using an alternative vendor or cloud-based web application (SaaS app) for that particular app. That is something you need to work on with business units and app owners within your organization. So don’t wait till the last minute when your back is against the wall. Start planning for the future now.

OneDrive for Business and Teams

OneDrive for Business and Teams has been a nightmare in multi-user or non-persistent environments for years now. So they get their own dependency layer in my cheat sheet. This is where I have done a lot of Citrix ShareFile OneDrive for Business Connector and FSLogix O365 Containers to solve these challenges and ensure the environment is supportable. Both OneDrive for Business and Teams were written for single user persistent (aka traditional desktops). I suspect the Product Managers for these teams at Microsoft didn’t want to support multi-user and non-persistent because it would require a re-architecture of the software itself which meant precious development cycles over many sprints trying to get that done versus features that the wider enterprise needed. It’s all about prioritization when it comes to agile software development. So it was up to the partner ecosystem, namely Citrix and FSLogix to solve this challenge.

With the advent of WVD (Windows Virtual Desktop) the OneDrive for Business and Teams engineering teams are now in a way forced to support VDI. Microsoft has seen what a tremendous use case there is for virtual desktops and virtual apps delivered from the cloud. I’ve heard from some people that these EUC workloads are the #1 workload in Azure IaaS today globally. WVD is designed to help create even more Azure consumption for EUC workloads. This I believe is going to have the very positive side effect of deep alignment between the WVD/RDS and Office teams toward this goal. There are now very recently released per-machine installs of OneDrive for Business and Teams available specifically to address the requirements of VDI.

Profiles & Personalization – The user’s “stuff”

Profile and personalization data

If you still use Microsoft Roaming Profiles with VDI or SBC, red card, stop your project immediately. You will never succeed trying to bring that legacy baggage into your project. Get this fixed ASAP before tackling other issues.

Citrix VAD offers UPM (User Profile Management) for basic profile management. Citrix WEM (Workspace Environment Management aka Norskale) can help with policy and configuration of UPM. VMware Horizon offers UEM (User Environment Manager) for basic profile management and policy management. Both UPM and UEM are good solutions but may not have all the bells and whistles you need for your organization.

I still stand by my claims from years ago that folder redirection is the devil (https://www.jasonsamuel.com/2015/07/20/using-appsense-with-vdi-to-help-resolve-folder-redirection-gpo-issues/). It was often times necessary for VDI. VHD mount technologies like FSLogix Profile Containers have pretty much overcome this challenge now without the need for folder redirection. The OS becomes much happier when it doesn’t have to pull bits from network file paths using SMB. A VHD can be stored on SMB if you wish but the OS will have no idea about it and won’t complain like it does with UNC paths. With Cloud Cache and the ability to use object-based storage like Azure Page Blob, it becomes very scalable as well.

Ivanti AppSense Environment Manager with user profiles stuffed into SQL has been around forever and is highly scalable; I know of environments with close to 180,000 seats. EM profiles are cross-OS but FSLogix profiles are not, so with FSLogix settings won’t roam from a Win10 virtual desktop to a Server 2016 virtual app. Both solutions have a “last write wins” option in the event of multiple sessions to the same profile.

FSLogix Office 365 Container (really a subset of Profile Container) is the de facto standard to make Office 365 work for VDI or SBC. In 2018, Microsoft realized it too and ended up buying the company. It’s super simple to configure and just works. It gives your users a native Office 365 experience vs. the hodgepodge mess Office 365 can be without it, to name a few:

- Outlook having to run in online mode or host .OSTs on an SMB share, both horrible choices.

- Having to disable Outlook Search

- No Windows Indexing

- Teams installing to Local App Data

It really just comes down to if you want VDI to be successful or not in your environment? If you need it to be

Microsoft has now publicly released information on the details of the “free for everyone” FSLogix entitlement (https://docs.microsoft.com/en-us/fslogix/overview#requirements). With the newer version, you don’t even need to apply a license key anymore. You own FSLogix Profile Container, Office 365 Container, Application Masking, and Java Redirection tools if you have one of the following licenses:

- Microsoft 365 E3/E5

- Microsoft 365 A3/A5/Student Use Benefits

- Microsoft 365 F1

- Microsoft 365 Business

- Windows 10 Enterprise E3/E5

- Windows 10 Education A3/A5

- Windows 10 VDA per user

- Remote Desktop Services (RDS) Client Access License (CAL)

- Remote Desktop Services (RDS) Subscriber Access License (SAL)

FSLogix solutions may be used in any public or private data center, as long as a user is properly licensed under one of the entitlements above. This means it can be used with VDI for both on-prem or in the cloud.

FSLogix allows for different techniques of high availability and backups for the profile containers you need to consider. Fellow CTP James Kindon has an excellent guide here covering various techniques and their pros and cons, especially when using multiple regions in Azure to store your profile containers and their impact on VDI login times: https://jkindon.com/2019/08/26/architecting-for-fslogix-containers-high-availability/

Group Policy

Single-threaded group policy meant for physical desktops can kill VDI. Do not apply the same GPOs to them as physical desktops and laptops. All that does is give you a performance penalty slowing down logins. Print out your GPO on paper and highlight only the settings absolutely necessary. Put user policy into EM so it runs multi-threaded and computer policy into GPO. Your physical desktops and laptops are persistent and can power through many things with 8 CPUs and 32 GB RAM on SSD. Your non-persistent virtual desktop with 2 vCPU and 4 GB RAM is just a fraction of that computing power and isn’t going to cope well. Have you ever noticed how during user login the system is at 100% CPU utilization? Booting and logging into a Windows system is the hardest thing for the OS to do and poor performing GPO just makes it worse.

In the future, think about getting away from group policy altogether if you can. You can use a small pocket of VDI as a test bed when this type of management is more mature and becomes non-persistent VDI friendly. Things like Microsoft Windows Autopilot and Intune can make it to where you don’t need any GPO at all in your VDI environment so none of the legacy baggage. It gives you an opportunity to start clean. In my opinion, these pieces are good to look at but not mature for this use case yet at this time so you will be stuck with GPO for a bit longer in VDI.

EUC Policies

There are policies that you need to apply at the control plane level that are unique to the brokering technology you decide to use. For Citrix environments, this means Citrix Policies. This is what gives you contextual access of HDX related settings when used with Smart Access or Smart Control including drive mapping, USB redirection, and other lockdown items you need.

Keep your policy ordering in mind. The Unfiltered policy should be the most restrictive because it is your baseline. Everything ordered below it should be the more targeted policies where you define your exceptions.

Citrix VAD also has WEM (Workspace Environment Management, aka Norskale), which can do much of what Ivanti can. Just make sure you read up fully on the issues and fixes in the releases from the end of 2018 and early 2019. Check the community forums before updating versions and use what works best for your environment.

VMware Horizon utilizes UEM (User Environment Manager) to help with policies.

Active Directory – the silent killer of VDI environments

Most people don’t realize their Active Directory is crap and how much of an impact it can have on a VDI session. I can’t tell you how many times I’ve seen VDI environments use a domain controller/

Summary of Webster’s points on Active Directory things to check, watch the video for details on each of these points:

- Domain and Forest Functional Levels

- Sites and Services: Global Catalog

- Sites and Services: Connection Objects

- Sites and Services: Subnets

- DNS Reverse Lookup Zones

- Duplicate DNS Entries

- Orphaned Domain Controllers

- Authoritative Time Server

- Domain Controller DNS IP Configuration Settings

- Active Directory Design Guidelines

Virtual Desktops are somewhere between servers and desktops. Your original AD design isn’t going to support them well. You have to be open to making changes in AD in order to support them or your VDI project will outright fail because nothing will perform as it should and no one will know why.

Active Directory Sites & Services is usually one of the biggest culprits I see being incorrectly configured. The IP subnet range for virtual desktops ends up talking to a domain controller on the other side of the world. To quickly troubleshoot this so you can assign the IP range to the proper site, you can run these commands on the impacted VM itself:

- nltest /dsgetsite

- nltest /dsgetdc:yourdomain.com

or run this from any AD joined machine if you know the client IP address of the impacted VM:

- nltest /dsaddresstosite:xxx.xxx.xxx.xxx
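
To see why a missing or overly broad subnet definition sends a virtual desktop to the wrong site, here is a minimal Python sketch of the idea behind that lookup: the most specific subnet containing the client IP decides the site. The subnets and site names are hypothetical, and this only illustrates most-specific-match behavior. It is not how the domain controller locator is actually implemented.

```python
import ipaddress

# Hypothetical subnet-to-site assignments, similar to what you would define
# in Active Directory Sites and Services. Names and ranges are made up.
SUBNET_TO_SITE = {
    "10.0.0.0/8": "HQ-London",
    "10.20.0.0/16": "Houston-DC",
    "10.20.30.0/24": "Houston-VDI",
}


def site_for_ip(ip, subnet_to_site=SUBNET_TO_SITE):
    """Return the site of the most specific subnet containing ip, or None."""
    address = ipaddress.ip_address(ip)
    best_network = None
    best_site = None
    for cidr, site in subnet_to_site.items():
        network = ipaddress.ip_network(cidr)
        if address not in network:
            continue
        # Prefer the longest prefix, i.e. the narrowest matching subnet.
        if best_network is None or network.prefixlen > best_network.prefixlen:
            best_network = network
            best_site = site
    return best_site


if __name__ == "__main__":
    for ip in ("10.20.30.15", "10.20.99.4", "10.99.1.1", "192.168.1.10"):
        print(ip, "->", site_for_ip(ip))
```

In this made-up layout, a new VDI range such as 10.99.1.0/24 that nobody registered falls through to the catch-all 10.0.0.0/8 and lands in the London site, which is exactly the "domain controller on the other side of the world" situation. An address with no matching subnet at all returns None.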

Virtual desktops are immobile devices that stay in the datacenter so Microsoft’s modern desktop management approach does not fully apply to them right now. Traditionally you use regular AD domain joins so MCS/PVS dynamic provisioning can control machine account passwords, SID, etc. Azure AD Join is a modern desktop management technique, but it is not meant for the virtual desktop use case. It is for physical laptops and Surfaces that move in and out of the office and talk back directly through the Internet. Dynamic provisioning technologies can’t store or manage Azure Device IDs at this time. However, to use modern desktop management and all the features like SSO to Azure AD protected SaaS apps, the virtual desktop will need to talk to Azure AD.

I am seeing more and more companies do Azure AD Hybrid Joins in their virtual desktop environments which is the best of both worlds. You can talk to both on-prem AD and Azure AD where dynamic provisioning will handle the AD interaction of the non-persistent VM. Note, Microsoft does not support Hybrid Azure AD join with VDI as per https://docs.microsoft.com/en-us/azure/active-directory/devices/hybrid-azuread-join-plan under the “Review things you should know” section – “Hybrid Azure AD join is currently not supported when using virtual desktop infrastructure (VDI).” In my experience, it works but if you want to be fully supported by Microsoft, I don’t advise doing this in your production environment. With Microsoft’s heavy focus on WVD (Windows Virtual Desktop) and multi-session Windows 10, I am sure this support stance will change in due time.

If you are ever in doubt and see some weirdness in a virtual desktop that you need to troubleshoot, the first command I always like to run in a command prompt is dsregcmd /status, which gives you a lot of detail on how the virtual desktop is interacting with the domain. In an Azure AD Hybrid Joined virtual desktop it should look something like this, the key fields being AzureAdJoined and DomainJoined, both with a value of YES:

+----------------------------------------------------------------------+

| Device State |

+----------------------------------------------------------------------+

AzureAdJoined : YES

EnterpriseJoined : NO

DomainJoined : YES

DomainName : YourDomainName

+----------------------------------------------------------------------+

| Device Details |

+----------------------------------------------------------------------+

DeviceId :

Thumbprint :

DeviceCertificateValidity :

KeyContainerId :

KeyProvider : Microsoft Software Key Storage Provider

TpmProtected : NO

+----------------------------------------------------------------------+

| Tenant Details |

+----------------------------------------------------------------------+

TenantName :

TenantId :

Idp : login.windows.net

AuthCodeUrl : https://login.microsoftonline.com/xxxxxxxxxxxxx/oauth2/authorize

AccessTokenUrl : https://login.microsoftonline.com/xxxxxxxxxxxxx/oauth2/token

MdmUrl :

MdmTouUrl :

MdmComplianceUrl :

SettingsUrl :

JoinSrvVersion : 1.0

JoinSrvUrl : https://enterpriseregistration.windows.net/EnrollmentServer/device/

JoinSrvId : urn:ms-drs:enterpriseregistration.windows.net

KeySrvVersion : 1.0

KeySrvUrl : https://enterpriseregistration.windows.net/EnrollmentServer/key/

KeySrvId : urn:ms-drs:enterpriseregistration.windows.net

WebAuthNSrvVersion : 1.0

WebAuthNSrvUrl : https://enterpriseregistration.windows.net/webauthn/xxxxxxxxxxxxx/

WebAuthNSrvId : urn:ms-drs:enterpriseregistration.windows.net

DeviceManagementSrvVer : 1.0

DeviceManagementSrvUrl : https://enterpriseregistration.windows.net/manage/xxxxxxxxxxxxx/

DeviceManagementSrvId : urn:ms-drs:enterpriseregistration.windows.net

+----------------------------------------------------------------------+

| User State |

+----------------------------------------------------------------------+

NgcSet : NO

WorkplaceJoined : NO

WamDefaultSet : YES

WamDefaultAuthority : organizations

WamDefaultId : https://login.microsoft.com

WamDefaultGUID : {xxxxxxxxxxxxx} (AzureAd)

+----------------------------------------------------------------------+

| SSO State |

+----------------------------------------------------------------------+

AzureAdPrt : YES

AzureAdPrtUpdateTime :

AzureAdPrtExpiryTime :

AzureAdPrtAuthority : https://login.microsoftonline.com/xxxxxxxxxxxxx

EnterprisePrt : YES

EnterprisePrtUpdateTime :

EnterprisePrtExpiryTime :

EnterprisePrtAuthority :

+----------------------------------------------------------------------+

| Diagnostic Data |

+----------------------------------------------------------------------+

AadRecoveryNeeded : NO

KeySignTest : MUST Run elevated to test.

+----------------------------------------------------------------------+

| Ngc Prerequisite Check |

+----------------------------------------------------------------------+

IsDeviceJoined : YES

IsUserAzureAD : YES

PolicyEnabled : NO

PostLogonEnabled : NO

DeviceEligible : NO

SessionIsNotRemote : YES

CertEnrollment : none

PreReqResult : WillNotProvision
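
If you want to check those two key fields across many desktops instead of eyeballing the output, something like the following Python sketch can help. It only assumes the "Name : Value" layout shown above. The function names and the shortened sample text are my own, not part of any Microsoft tooling.

```python
def parse_dsregcmd(output):
    """Parse 'Name : Value' lines from dsregcmd /status text into a dict."""
    fields = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, box borders (+---+) and section titles (| ... |).
        if not line or line.startswith("+") or line.startswith("|"):
            continue
        # Split on the first colon only, so URLs in the value stay intact.
        name, separator, value = line.partition(":")
        if separator:
            fields[name.strip()] = value.strip()
    return fields


def is_hybrid_joined(fields):
    """True when both AzureAdJoined and DomainJoined report YES."""
    return (
        fields.get("AzureAdJoined") == "YES"
        and fields.get("DomainJoined") == "YES"
    )


# Shortened sample in the same shape as the output above.
SAMPLE = """\
+----------------------------------------------------------------------+
| Device State                                                         |
+----------------------------------------------------------------------+

AzureAdJoined : YES
EnterpriseJoined : NO
DomainJoined : YES
DeviceId :
AuthCodeUrl : https://login.microsoftonline.com/xxxxxxxxxxxxx/oauth2/authorize
"""

if __name__ == "__main__":
    state = parse_dsregcmd(SAMPLE)
    print("Hybrid joined:", is_hybrid_joined(state))
```

On a Windows virtual desktop you could feed it live output with subprocess.run(["dsregcmd", "/status"], capture_output=True, text=True).stdout in place of the sample text.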

DNS

DNS performance is fundamental for the Windows OS to work, since it talks to the enterprise network as well as to the rest of the world. DNS must be fast, but you must also consider security. I have seen a lot of hybrid environments using Windows DNS and Infoblox but they were not originally architected with the amount of

DHCP

Always remember that virtual desktops can burst in capacity. When using PVS or MCS for Citrix or Horizon Composer or Instant Clones for VMware, machine identity is handled by the orchestration engine but your Infoblox, for example, may not have a DHCP scope to handle VMs being down for weeks and coming back up. You may run out of IPs very quickly if DHCP is still holding on to them.
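
A quick back-of-the-napkin calculation shows how fast this can bite. The Python sketch below is a simplified model, not a sizing tool: it assumes every rebuilt VM comes up with a new MAC address and takes a new lease while the old lease sits there until it expires. The numbers are hypothetical.

```python
def peak_leases(concurrent_vms, rebuilt_per_day, lease_days):
    """Estimate leases held at once: live VMs plus unexpired stale leases."""
    stale_leases = rebuilt_per_day * lease_days
    return concurrent_vms + stale_leases


def usable_addresses(prefix_length):
    """Usable IPv4 host addresses in a subnet of the given prefix length."""
    # Subtract the network and broadcast addresses.
    return 2 ** (32 - prefix_length) - 2


if __name__ == "__main__":
    scope = usable_addresses(23)  # a /23 scope
    for lease_days in (8, 1):
        needed = peak_leases(concurrent_vms=400, rebuilt_per_day=50,
                             lease_days=lease_days)
        verdict = "fits" if needed <= scope else "EXHAUSTED"
        print(f"lease {lease_days}d: need {needed} of {scope} -> {verdict}")
```

With these made-up figures, 400 running desktops on a /23 look comfortable on paper, yet an 8-day lease pushes the demand to 800 addresses against 510 available. Dropping the lease to 1 day brings it back down to 450. Shorter leases or a bigger scope are the two levers.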

Brokering

This is where Citrix, VMware, and Microsoft come in. Which vendor does VDI connection brokering the best? That’s something no one can tell you without knowing more about your company and users, what you want to use VDI for, and how you intend to lay it all out. Some of these vendors do things better than others and it’s not like they are standing still…each one is improving their solutions every day just like you go into work and try to improve your environment every day. I try my best to take an agnostic approach when recommending a solution but I tell everyone I talk to, don’t just listen to me or others blindly. Do your due diligence and formulate your own conclusions with all the latest facts on these solutions. When considering your brokering solution, clear your mind and go into it with a clean slate. What you saw a vendor doing 10 years ago based on some negative experience you had is not what they are doing now so you need to let go of all those preconceived notions. Start from 0 and research modern brokering from each vendor. Use the community comparisons and annual smackdown papers and surveys put out to help you understand what you need to be looking at.

Consider external and internal brokering requirements. Gateway solutions from all vendors are important. Some are more capable than others. Understand what features you need from each vendor. Also keep in mind building a gateway solution on-prem is not the same as doing it in cloud-based IaaS where you don’t have access to 0s and 1s at the network level like you would on-prem. The most common items that come up in IaaS are around doing high availability or global load balancing of gateway solutions.

There are also newer vendors (some born in the cloud vs. ported over from traditional on-prem roots) that are targeting both the Enterprise and SMB space with less complex brokering. This often means they are not as feature rich as what Citrix, VMware, and Microsoft have built over the last 30+ years in this industry, but if one fits your needs you should look at it. Amazon WorkSpaces, Parallels, Workspot, and Frame (now Xi Frame since Nutanix bought them) are just a few names you may see come up.

Remoting Protocol

The Protocol Wars! Yes, they continue. Almost all remoting protocols support both UDP and TCP now, and UDP is preferred over TCP these days. The vendors that don’t support UDP yet in certain deployment scenarios are planning it on their road map. Remoting protocols are constantly changing and there are many comparison articles and videos out there. If you want to do your own comparisons I highly suggest downloading the Remote Display Analyzer tool (http://www.rdanalyzer.com/) and testing yourself. REX Analytics (https://rexanalytics.com/) is also a great tool if you want to get into some automated testing. The 3 big remoting protocols to consider are:

- Citrix VAD – HDX Adaptive Transport using Enlightened Data Transport (EDT) Protocol

- VMware Horizon – Blast Extreme Adaptive Transport using Blast Extreme Display Protocol

- Microsoft WVD and RDS – Remote Desktop Protocol (RDP) with RemoteFX being an option for RDS only

GPUs

Do you need GPU or vGPU for heavy engineering type users? Or will taxing the CPU of the VM be enough for these workloads? Should you present the virtual desktop itself with a slice of a video card or is it better to do that on a server and simply stream it into the virtual desktop thereby having a common virtual desktop sizing for all users?

NVIDIA has been the gold standard for GPU accelerated VDI since around 2006. GRID is the term you need to know. It allows a single GPU to be carved up and shared with multiple virtual desktops using profiles. AMD RapidFire and Intel Iris Pro are also supported by most VDI brokering vendors now (Citrix, VMware, etc.) but NVIDIA has had the majority share of both on-prem and cloud VDI workloads for some time now. For VDI use cases, NVIDIA GRID enabled cards are often used for graphics-intensive work such as:

- Designers in modeling or manufacturing settings for CAD (Computer-aided design), think Autodesk AutoCAD.

- Architects for a design setting, think Solidworks.

- Higher Education, think MATLAB, AutoCAD, Solidworks.

- Oil and gas exploration — ding ding, I live in Houston so guess what I use NVIDIA GRID for the most? For example, Esri ArcGIS and Schlumberger Petrel are pretty much the standard everyone uses NVIDIA vGPU capability with and extremely easy to configure. Any type of mapping, geocoding, spatial analytics, modeling, simulations, etc. used by GIS teams and Geoscience teams renders visualizations so much better with GPU acceleration.

- Clinical staff in hospitals or healthcare organizations — ding ding, another big use case living in Houston with the world-renowned Texas Medical Center. Epic, Cerner, Allscripts, McKesson, or any EMR and EHR can benefit from GPU acceleration. Radiology and other medical imaging staff love NVIDIA GRID for PACS (picture archiving and communication systems).

- Power users and knowledge workers who need the ability to run some random heavy visualization app that a GPU can help perform better. This turns the NVIDIA GRID from a specialty VDI use case to general-purpose VDI. These days the demands of Windows 10 and Office alone have started some on the path of GPU for general-purpose VDI.

NVIDIA Kepler architecture cards (GRID K1 and GRID K2) are the older cards under GRID 1.0, where GRID vGPU was built in. These can no longer be purchased but I still have many customers using them. Kepler was replaced by the Maxwell architecture (Tesla M60, Tesla M10, and Tesla M6 cards), which introduced GRID 2.0, where GRID vGPU is a licensed feature that uses a GRID License Manager server and is licensed on an annual subscription or perpetual basis, as most things in IT are these days. This was often affectionately called the “vGPU tax” by VDI engineers several years ago but has come to be accepted now. There are 4 types of GRID 2.0 licensing:

- GRID vApps (aka NVIDIA GRID Virtual Applications) = used for SBC (server-based computing) like RDSH, Citrix Virtual Apps (XenApp), VMware Horizon Apps. Concurrent user (CCU) model of licensing, per GPU licensing is not available.

- GRID vPC (aka NVIDIA GRID Virtual PC) = used for VDI (virtual desktops) using Citrix Virtual Desktops (XenDesktop), VMware Horizon, Microsoft WVD, etc. Concurrent user (CCU) model of licensing, per GPU licensing is not available.

- QUADRO vDWS (aka NVIDIA Quadro Virtual Data Center Workstation) = for workstations and advanced multi-GPU capability. Concurrent user (CCU) model of licensing, per GPU licensing is not available.

- NVIDIA vCS (aka NVIDIA Virtual Compute Server) = for HPC (high-performance computing) use cases as well as AI (artificial intelligence) and DL (deep learning). This is the only licensing model that supports per GPU licensing.

You may also come across Tesla P4, Tesla P6, Tesla P40, and Tesla P100 cards. These are Pascal architecture cards instead of Maxwell and are not intended for general purpose VDI. There is also the NVIDIA T4, Quadro RTX 6000, and Quadro RTX 8000 cards that are Turing architecture-based cards which are also not intended for general purpose VDI so don’t get confused and buy the wrong cards for your environment. Lastly, there’s the Tesla V100 which is a Volta based card and is an amazing piece of architecture with 5120 NVIDIA CUDA Cores and 640 NVIDIA Tensor Cores! It is in no way meant for VDI, purely high-performance computing, AI, data science, deep learning, next-level “science fiction becoming reality” stuff. 🙂

Be prepared to pay as GRID licensing is expensive not to mention you will have less density and more power/cooling costs when you do GPU in your datacenter at scale. You will need to make decisions between using GPU virtualization (shared graphics aka carving out the card for the most user density) vs. GPU passthrough (a dedicated card per VM or user). The most current NVIDIA GRID offerings for on-prem are now Maxwell-based cards. These are:

- Tesla M10 – for rack servers, offers the highest user density, most popular card I use.

- Tesla M60 – for rack servers, super high performance with the most NVIDIA CUDA cores, has active cooling in addition to the usual passive cooling due to the higher power usage.

- Tesla M6 – for blade servers, low density, I don’t see these used as much.
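
To make the density trade-off concrete, here is a rough Python sketch of the shared graphics math: each vGPU profile reserves a fixed slice of frame buffer, so desktops per card is simply frame buffer divided by profile size, multiplied by the number of physical GPUs on the card. The GPU counts and frame buffer sizes below are taken from NVIDIA’s published specifications for these cards as best I know them, so verify them against current NVIDIA documentation before sizing anything. The function name is my own.

```python
# Physical GPUs per card and frame buffer per physical GPU, in MB.
# Assumed from NVIDIA's published specs; verify before you size hardware.
CARDS = {
    "Tesla M10": {"gpus": 4, "fb_mb_per_gpu": 8192},
    "Tesla M60": {"gpus": 2, "fb_mb_per_gpu": 8192},
    "Tesla M6": {"gpus": 1, "fb_mb_per_gpu": 8192},
}


def desktops_per_card(card, profile_fb_mb):
    """Max virtual desktops per card for a given frame buffer profile size."""
    spec = CARDS[card]
    # Frame buffer is carved per physical GPU, so divide per GPU first.
    per_gpu = spec["fb_mb_per_gpu"] // profile_fb_mb
    return spec["gpus"] * per_gpu


if __name__ == "__main__":
    for card in CARDS:
        for profile_mb in (512, 1024, 2048):
            count = desktops_per_card(card, profile_mb)
            print(f"{card} with {profile_mb} MB profile: {count} desktops")
```

This is only the frame buffer ceiling. Real density also depends on your licensing edition, the hypervisor, and how hard your users actually push the GPU, so treat the result as an upper bound.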

You can also use NVIDIA backed VMs in a consumption model using the public cloud. Very easy to deploy and you just install the latest NVIDIA GRID driver for your OS and you will be in business (you can use any Windows Server OS or Windows Desktop OS – from Server 2008 R2 through Server 2019 and Windows 7 through Windows 10). In Microsoft Azure you can select from 2 types of VMs:

- NV series VMs – uses Tesla M60 GPU, does not use Premium Storage so most people are moving off of these VMs.

- NVv3 series VMs – uses Tesla M60 GPU, uses Premium Storage and Premium Storage caching, can do higher vCPU and RAM, and is considered the new standard for VDI in Azure.

DO NOT use the below VM types in Azure for VDI. These are meant for HPC (high-performance computing), AI, deep learning, etc. and are neither cost-effective nor tuned for EUC use cases:

- NC-series VMs – Tesla K80 GPU driven VMs, does not use Premium Storage or Caching so most people are moving off of these VMs.

- NCv2-series VMs – Tesla P100 GPU driven VMs, basically double the NC series in performance, uses Premium Storage and Premium Storage caching

- NCv3-series VMs – Tesla V100 GPU driven VMs, almost double the performance of the NCv2-series, uses Premium Storage and Premium Storage caching

- ND-series VMs – Tesla P40 GPU driven VMs, for spanning multiple GPUs, uses Premium Storage and Premium Storage caching

- NDv2-series VMs – Tesla V100 NVLink Fabric multi-GPU driven VMs, 8 GPUs interconnected with 40 vCPU and 672 GB RAM as the basic VM size, uses Premium Storage and Premium Storage caching

In AWS, you can use the Amazon EC2 G3 instances for VDI. These are backed by Tesla M60 GPUs as well and can do a larger amount of vCPUs than the NVv3 series VMs in Azure. This can change any day so don’t make your public cloud GPU backed VDI decisions without checking what’s available and what your needs are first if you have a multi-cloud VDI strategy.

Application Delivery Strategy – Masking, Layering, Streaming, Hosting, Containerizing, Just in Time

Masking, Layering, Streaming, Hosting, Containerizing, Just in Time…how many ways can we deliver an app into a single image VDI environment? Ensuring single image management technologies are used correctly and you actually have 1 single master image for the OS is something some companies struggle with. They have to make exceptions for certain

- Installed = Basically installing 100’s of apps baked into one image like it was the late 2000s again. Or maybe you decide you can do it with 2 images, then 3, then 4 and suddenly 10…image sprawl. Good luck with that.

- Masking = Microsoft FSLogix App Masking

- Layering =

  - Citrix App Layering (aka Unidesk, which uses VHD or VMDK mounting)

  - VMware App Volumes (aka CloudVolumes, which uses VMDK or VHD mounting)

  - Liquidware FlexApp (which uses VHD or VMDK mounting)

  - Microsoft MSIX AppAttach (coming soon, which uses VHD mounting)

- Streaming = This is legacy app streaming, look at “Just in Time” further below for more modern options

  - Microsoft App-V (basically on its way out and I no longer recommend using)

  - Citrix Application Streaming (deprecated in 2013 in favor of App-V)

- Hosting = Also referred to as published apps or hosted apps. This is delivering an app from a Server OS over a remoting protocol into the VDI session, which is itself already being delivered via a remoting protocol, so a double-hop. This is a very mature and prominent app delivery method to “webify” Windows apps in most Fortune 50 environments.

  - Citrix Virtual Apps

  - VMware Horizon Apps

  - Microsoft RDSH and RemoteApp (now really a part of Microsoft WVD)

- Containerization =

  - Turbo

  - Cloudhouse

  - Numecent

  - VMware ThinApp

  - Microsoft App-V (basically on its way out and I no longer recommend using)

- Just in Time (JIT) = On-demand delivery of app execution bits.

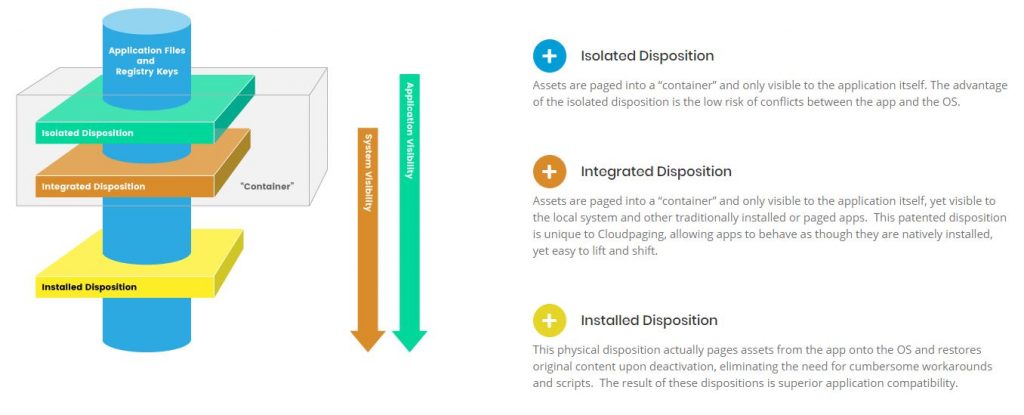

I love this one image from Numecent’s website (https://www.numecent.com/) because I feel it captures app dispositions really well in a very generic way that can be applied to whatever app delivery strategy or combination of app delivery strategies (which is more common in the field) you choose. It’s a simple at a glance understanding of modern app delivery that allows you to disconnect apps from the underlying Windows OS:

Application compatibility is often brought up as part of your application delivery strategy. At one point in time, it was the EUC Engineer’s duty to figure out which legacy apps could be delivered through virtualization and remoting and which had to remain on physical endpoints. These challenges have been around across verticals and came to a head as we moved from 32-bit to 64-bit architectures. Solutions like AppDNA and ChangeBASE were used to fingerprint legacy apps and give you ammunition to take back to business owners on the need to modernize apps. Over the years, the need for this has diminished. Even legacy apps can be containerized for the most part to avoid these compatibility issues. Additionally, Microsoft has the App Assure program where they will fix your legacy Windows apps and websites to work with Windows 10 and Edge for free. This benefit extends to Windows Virtual Desktop as well: https://www.microsoft.com/en-us/fasttrack/microsoft-365/app-assure

Image Management & Automation

If you are new to VDI your first instinct may be to just clone a physical box as your baseline virtual desktop image. You DO NOT clone your physical image onto a virtual desktop just to see what happens…ever. I’ll tell you right now you are wasting your time. Build your virtual desktop master images from scratch using optimization tools and automation.

Master images should not be built by hand. You are introducing human error and you will never be able to replicate it. People used to call master images “gold images” but then they quickly found out this was a lie. Your image is not gold. The first time you crack your image and update it you are introducing variables. Now you have a “silver image”. Then “bronze”, then “lead”, all the way down to “dirt”. Your gold image is crap the first time a human touches it and it continues to degrade until the image barely works anymore. Master images need to be automated so as to not introduce human error and ensure you are getting the same replicable master image every time. All updates and changes to a master image should be automated, never by hand if you can help it.

Infrastructure needs to be treated as code. You almost need to take a DevOps like approach to image management and automation. If you don’t know how to get started with automation go read up on Automation Framework from my friend Eric (Trond) Haavarstein who is a fellow CTP, EUC Champion, and MVP: https://xenappblog.com/virtual-automation-framework-master-class/. Many companies choose to use tools like HashiCorp Packer and Chocolatey for application packaging to help with automated image builds. You can integrate the community-maintained Evergreen PowerShell module to pull the URLs for new versions of enterprise software from their official sources as well. There are also deployment automation tools like Spinnaker and Octopus Deploy some organizations use. Continuous configuration automation can be done using tools like Chef, Puppet, HashiCorp Terraform, Red Hat Ansible, or SaltStack. This continuous integration and continuous delivery/deployment is called CI/CD. You build multiple CI/CD pipelines in the tool of your choice to maintain a DevOps strategy for your EUC environment. It takes genuine work and it will be slow for most companies starting out with image automation and automated deployments, but I promise you it gets better as you learn and grow these skills in your organization. The results are very rewarding with the amount of tedious manual work hours your company will save and the agility your company will gain.

Having automated image management means changes are now reviewed easily by your team. People don’t have to keep the tribal knowledge of what has been done to an image in their heads or in Word docs anymore, because everything is in config files for all to see. The burden of documenting changes is gone since it’s all part of the image release process now. With the tedious busywork out of the way, your team can work on more important aspects of your VDI environment that provide actual business value.

Master images should be versioned and stored centrally for easy rollback and distribution. Ensure typical backup policies in your organization are in effect. It goes without saying but I have come across companies where master images weren’t being backed up.

Some core applications need to be installed in your image rather than using an application delivery mechanism. You need to minimize the number of apps you have automated into your master image and the rest should be delivered via an app delivery mechanism I outlined in the earlier section. Then you need to verify these few core apps have been correctly installed into your master image using the Pester PowerShell module which is already part of your Microsoft Windows 10 or Server 2016 operating system and higher. Your base image/master image is the foundation of your VDI environment so it needs validation and error detection prior to sealing and using that image with your end-users. There is a great article here by fellow CTP Matthias Schlimm showing how to set this up: https://eucweb.com/blog/1886
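
Pester itself is PowerShell, but the idea is simple enough to sketch in a few lines of Python if you want to see the shape of such a check before you set it up: compare what you expect in the image with what the image reports, and refuse to seal if anything is off. The app names, versions, and function name are hypothetical, and how you collect the installed inventory from the image is left out.

```python
def validate_image(expected, installed):
    """Compare expected core apps against what the image reports.

    Both arguments map app name -> version string. Returns a list of
    failure messages. An empty list means the image passes.
    """
    failures = []
    for app, wanted_version in expected.items():
        found_version = installed.get(app)
        if found_version is None:
            failures.append(f"{app}: not installed")
        elif found_version != wanted_version:
            failures.append(
                f"{app}: expected {wanted_version}, found {found_version}"
            )
    return failures


if __name__ == "__main__":
    # Hypothetical core apps for a master image.
    expected = {"VDA": "1912", "Chrome Enterprise": "80.0", "FSLogix": "2.9"}
    installed = {"VDA": "1912", "Chrome Enterprise": "79.0"}
    problems = validate_image(expected, installed)
    for problem in problems:
        print("FAIL:", problem)
    print("Seal image" if not problems else "Do not seal image")
```

Run as a gate in your image build pipeline, a check like this catches a silently failed install before the image ever reaches your users.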

Another free tool to build your base images that is picking up a lot of steam in the EUC community is OSDBuilder. This is a PowerShell module to help you programmatically build your base OS image in a repeatable fashion with Windows Updates included. There is an excellent YouTube video here demonstrating it: Using OSDBuilder PowerShell to Create a Windows Server 2019 Reference Image!

Modern desktop management has some concepts that don’t work well with Windows 10 virtual desktops presently:

- Azure AD Join + Intune MDM

- Conditional Access

- Public or private store apps

- Windows Autopilot

They are not necessary in most cases with VDI anyhow. Single image management using MCS or PVS with Citrix VAD, Linked Clones or Instant Clones with VMware Horizon, and host pools with Microsoft WVD in Azure don’t need these things.

Windows OS Optimization – Forget what you THINK you know about Windows

Turning off unnecessary Windows services aimed at physical machines may sometimes increase performance and improve your infrastructure security posture, but it can also lead to poor user experience so be cognizant of this. Example: Turning off Windows visualizations can increase performance and density but leaves the desktop looking like a kiosk from the 1990s.

Instead of guessing how to optimize your image, use the tools the experts use. I love to use the following personally:

- Citrix Optimizer – https://support.citrix.com/article/CTX224676

- VMware OSOT (VMware OS Optimization Tool) – https://labs.vmware.com/flings/vmware-os-optimization-tool

- Microsoft WVD VDI optimizations script – https://github.com/TheVDIGuys/Windows_10_VDI_Optimize

- BIS-F (Base Image Script Framework) for sealing – https://eucweb.com/

You can even run them one right after another and I haven’t seen any issues to date. Keep in mind these optimizations are not a magic bullet. It is up to you to use these as baselines and see if there is something that MUST be turned back on in your environment. Don’t guess to save time or do something just because you have historically done it in your other computing environments you manage. Just do the baselines, analyze the results, then make a decision if something needs to be flipped back on.

Great resources/step-by-step guides:

- Creating an Optimized Windows Image for a VMware Horizon Virtual Desktop – https://techzone.vmware.com/creating-optimized-windows-image-vmware-horizon-virtual-desktop

Further, there are things that you need to do yourself to improve the most critical part of the VDI user experience, the login speed. Fellow CTP James Rankin has one of the most comprehensive guides on this topic:

https://james-rankin.com/articles/how-to-get-the-fastest-possible-citrix-logon-times/. I promise you if you follow this guidance you will achieve 6.5 second logins with Windows 10 and 5.2 second logins with Windows Server 2016 as James describes. I have personally used many of these optimizations to get 7 second logins with Windows 10 with great ease in my career. If a virtual desktop login is not under 10 seconds, I am not happy and there’s work to be done.
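
If you export logon durations from whatever monitoring tool you use, holding them up against that 10 second bar takes only a few lines. This Python sketch is just an illustration. The function name and sample durations are made up.

```python
def login_report(durations_seconds, target_seconds=10.0):
    """Summarize logon durations against a target (default: under 10 seconds)."""
    # A login counts as slow when it is not under the target.
    slow = [d for d in durations_seconds if d >= target_seconds]
    return {
        "logins": len(durations_seconds),
        "slow": len(slow),
        "worst": max(durations_seconds) if durations_seconds else None,
    }


if __name__ == "__main__":
    # Hypothetical logon durations in seconds.
    sample = [6.5, 7.0, 12.3, 10.0]
    print(login_report(sample))
```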

Web Browsers

Guess what almost all of your users do in VDI all day? 2 things: browse the web and email people. If you can’t get these 2 critical things right, your VDI environment has failed.

Always add a 3rd party browser in your image. I typically use Chrome Enterprise. Usage can be controlled via Application Control and policy can be laid down with EM. It can plug right into your ITSM solution if you need to justify business use cases for the users that need it.

If you think Internet Explorer is still an enterprise browser and lead with that in your environment, good luck to you. IE is a legacy artifact because of internal web apps that never progressed. It should be a tier 2 browser in organizations for compatibility reasons. It should never be the tier 1 primary browser for the company. Edge was supposed to change that and be tier 1 but was a mess, and now Microsoft is circling back trying to change that with a Chromium-based approach.

So for those of us that live in reality and like modern websites to work with a modern web browser, Chrome Enterprise must be included in your virtual desktop.

Web Browser Isolation

Beyond just choosing the right installed browsers, you also want to be mindful that they are the biggest threat vector within a virtual desktop. From security issues with the browser itself to some link your user ends up clicking on that slips past your web proxy controls and actually renders. For this reason, look into isolating the browser itself from the Windows 10 OS. You can do it yourself as part of your application deployment strategy using things like containerization, streaming, etc. or you can hit the easy button and use a cloud-based browser service to accomplish this.

Clients

Users use pretty much any device form factor these days for business. It’s a combination of desktops, laptops, mobile devices, tablets, thin clients, and web browsers. Most mature EUC vendors support web browsers for access to virtual desktops these days. So no need to install a separate client for the remoting session.

The device posture doesn’t matter too much these days with a remoting protocol and the various protection layers you deploy with a virtual desktop. Pretty much all my customers use both managed and unmanaged BYO devices with their virtual desktop environments.

If you do decide to deploy thin clients, I highly suggest looking at IGEL. It is hands down the best management experience I’ve ever seen. If you’re still using Dell Wyse and HP here, you are wasting your time in my opinion. Once you try IGEL you won’t want to deploy anything else. Their UD Pockets are so cheap to deploy that I have some customers who pass them out like candy to their users.

Printing

The bane of remoting protocols! When you are in one location and the computing environment is in another, where do you print to? That’s the first question that comes up, followed by many deeper questions as you begin to unravel the ball of user requests that is printing. How can I print to my plotter in such and such office if it’s not the default printer that is being mapped, how is that custom large format print driver handled, etc. You had to resort to ScrewDrivers back in the day to rein things in. The remoting vendors themselves have done a great job solving all the intricacies these days with universal print drivers, so I usually don’t have to do much with printing at all beyond mapping the default printer upon session brokering via a simple policy. Printing isn’t the nightmare it was 10+ years ago. With that said, for advanced enterprise printer management I still recommend looking into the following vendors to see if they fit your business:

- Tricerat (maker of ScrewDrivers) – https://www.tricerat.com

- ThinPrint – https://www.thinprint.com

- PrinterLogic – https://www.printerlogic.com

Application Process Control & Elevation

Ivanti Application Control (

I have been in environments where CryptoLocker or some other ransomware variant ran amok on physical desktops but the virtual desktop environment was immune due to the Trusted Ownership policy from Application Control.

Microsoft also has AppLocker but it is nowhere near as robust as Application Control in my opinion. If you have a simple VDI environment, then AppLocker may be perfect for your needs. Additionally, there is the newer Windows Defender Application Control (WDAC), which at the moment can complement AppLocker and will likely merge with it into one solution someday. Using the two together can lead to a much more robust solution. As of now, there are no guides available specific to non-persistent VDI or WVD, but this is sure to change over time.

Sometimes users do need to run elevated actions. YOU SHOULD NEVER give them admin rights to anything. Use Ivanti Application Control to elevate privileges for just those actions and child processes. You can even build a workflow that requires approval for these actions.

Operating System Hardening

OS optimization tools I discussed earlier do not harden your operating system. Their intent is to improve performance and user experience. In reducing unnecessary Windows services, yes, sometimes you get the benefit of a reduced attack surface as a side effect. But that does not harden your OS. You need to purposefully take measures to harden the OS yourself.

My good friend and fellow CTP Dave Brett wrote an excellent guide on blocking malicious use of script hosts and command-line shells like PowerShell in a series of articles on ethical hacking for VDI/EUC environments here:

- Secure Powershell In Your EUC Environment – https://bretty.me.uk/secure-powershell-in-your-euc-environment/

- Secure Unquoted Service Paths in your EUC Environment – https://bretty.me.uk/secure-unquoted-service-paths-in-your-euc-estate/

- Secure and Minimize Lateral Movement In Your EUC Environment – https://bretty.me.uk/secure-and-minimize-lateral-movement-in-your-euc-environment/

- Secure Local Drive Access On Your EUC Endpoints – https://bretty.me.uk/secure-local-drive-access-on-your-euc-endpoints/

EDR – Endpoint Detection and Response

Anti-virus, anti-malware, and other endpoint threat management tools need to be VDI aware. The anti-virus solutions that go on your physical desktops will destroy VDI and SBC environments. EDR (endpoint detection and response) products usually don’t understand non-persistent environments or multi-user systems. They will scan like crazy and attempt to pull updates onto 1,000s of systems, not realizing that once the user logs off the system comes back up clean and the process starts all over again. Your VDI environment will be stuck in an update loop.

Many vendors started offering VDI aware AV that can do offloaded scanning and understand it’s a dynamic VM that is spun up from a master image that was already scanned and cleared prior to sealing the image. Some vendors are better than others.

Bitdefender HVI is great if you run XenServer and want hypervisor introspection that does not require an agent on the VM but hardly anyone uses XenServer in my region. Many of my VMware centric customers use TrendMicro Deep Security for this which has hooks into VMware vShield. Bitdefender GravityZone SVE (Security for Virtualized Environments) on the other hand, is solid gold regardless of hypervisor on-prem or deploying to cloud (Azure, AWS, GCP, etc) where you won’t have hypervisor level access. This or Windows Defender with a slim config is what I most commonly use.

I also want to commend the Bitdefender Product Management team. They are one of the few anti-virus vendors that embraces non-persistent virtual desktops and designs solutions for these environments. Non-persistent VDI is not an afterthought for them. Their PMs are very knowledgeable and receptive to the needs of non-persistent VDI so I highly recommend taking a look.

Windows Defender is a solid offering and can hook into Microsoft Defender ATP (Advanced Threat Protection) in Azure, which gives you a much better overview of your environment. Unfortunately, it’s not quite non-persistent VDI aware so there are some caveats. We are seeing great progress every quarter with Defender ATP and it may become a standard for VDI very soon. Microsoft is improving Windows Defender to be more compatible with non-persistent VDI and has released some good basic configuration guidance here: https://docs.microsoft.com/en-us/windows/security/threat-protection/windows-defender-antivirus/deployment-vdi-windows-defender-antivirus.

Microsoft is also working to make Microsoft Defender ATP (previously known as Microsoft Windows Defender ATP) more non-persistent VDI friendly and has released configuration guidance here: https://docs.microsoft.com/en-us/windows/security/threat-protection/microsoft-defender-atp/configure-endpoints-vdi

Other non-persistent VDI aware anti-virus solutions I have used are Symantec Endpoint Protection with Shared Insight Cache, McAfee MOVE, and a slew of others, with varying degrees of success since VDI is mostly an afterthought for them. Some have been getting better over the years, however.

Some vendors claim their solution works with VDI simply because it’s Windows 10 based. Be cognizant of performance issues caused by these solutions, as they may have been designed with physical machines in mind. Test your image without the solution as your baseline, then install the solution and re-test. You will often find that metrics provided by ControlUp, Director, LoginVSI, etc. are enough to prove the performance impact and can tell you very quickly whether or not the solution is a fit for your VDI environment. Vendors often say something is supported but purposely don’t say what the performance impact is, as that can vary by environment. You must verify this yourself on your own hardware or in the cloud tenant your workloads run in, as every environment is unique. Vendors are always releasing new versions, so what you heard from someone in the past may be outdated and it is worth testing yourself. Cylance, Tanium, and Carbon Black are very popular solutions for physical machines that are often requested in VDI environments, and many in the EUC community, myself included, have had to pay close attention to performance when working with them. In some cases, scaling compute on your virtual desktops (CPU, RAM, disk speed) can help alleviate the issues you observe. Of course, you need to weigh the increased costs of doing so when making these decisions.
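The baseline-then-re-test comparison can be reduced to a single number per metric. The following is a minimal sketch, assuming you have exported logon durations (in seconds) from whatever monitoring tool you use; the sample values, the function names, and the 10% budget are illustrative assumptions, not figures from any vendor.

```python
from statistics import mean


def percent_change(baseline, with_agent):
    """Return the percent change in the mean from baseline to with_agent."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return (mean(with_agent) - base) / base * 100.0


def exceeds_budget(change_pct, budget_pct=10.0):
    """True when the measured slowdown is larger than the budget you accept.

    The 10% default is an arbitrary placeholder; pick your own threshold.
    """
    return change_pct > budget_pct


# Hypothetical logon durations in seconds, for illustration only.
baseline_logons = [20.0, 22.0, 18.0]   # image without the security agent
agent_logons = [25.0, 27.0, 23.0]      # same image with the agent installed

change = percent_change(baseline_logons, agent_logons)
print(f"Logon time change: {change:+.1f}%")          # Logon time change: +25.0%
print("Over budget:", exceeds_budget(change))        # Over budget: True
```

The same comparison applies to any metric you capture in both runs, such as CPU ready time, disk latency, or application launch time.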

Some of the anti-virus requirements I would suggest looking for when you choose an AV solution for your non-persistent VDI environment:

- Cloned machine aware. Will know GUID is identical across 1,000s of machines since it’s all spun up off the same master image.

- Destroyed machines (de-provisioned upon user logoff) are not orphaned in AV console machine inventory.

- Know that a typical virtual desktop VM is 2 vCPU and 8 GB RAM and will not impact user performance and system latency negatively (login, profile load, policy, shell, web browsing, etc.). It should work to reduce CPU, RAM, disk I/O, and network load on the system.

- Realize VM is from a master image and not scan those already scanned areas across 1,000s of machines needlessly. This can kill your VDI environment locking up your host resources. It needs to be able to cache scan results and share among all 1,000s of virtual desktops.

- Not attempt to do definition updates upon user login to 1,000s of machines that will be destroyed after user logoff. Need to prevent update loops.

- Perform offloaded scanning, as in not use the machine’s vCPU for scanning but an ancillary vCPU from a different system or appliance near to the virtual desktop VM. This decouples the scan engine and threat intel duties from the VM to this ancillary system.

- Preferably auto-detect your VDI environment and apply the most up to date recommended exclusions from the vendor (Citrix, VMware, Microsoft, etc) so you don’t have to keep on top of it.

Windows Firewall is commonly turned off in many environments to eliminate

Please don’t forget about anti-virus exclusions. You will run your VDI environment into the ground without doing your exclusions:

- Citrix Endpoint Security and Antivirus Best Practices – https://docs.citrix.com/en-us/tech-zone/build/tech-papers/antivirus-best-practices.html

- VMware Antivirus Considerations in a VMware Horizon 7 Environment – https://techzone.vmware.com/resource/antivirus-considerations-vmware-horizon-7-environment

PAM – Privileged Access Management

Privileged Access Management (PAM) is extremely important in enterprise environments, and a VDI environment has specific requirements to ensure it is compatible with PAM solutions. Something like 80% of security breaches involve privileged access abuse. Many of us know about attacks that use Mimikatz to grab login credentials out of memory, or that simply pull passwords from Active Directory Group Policy Preferences exposed in XML files in SYSVOL directories. We also have to worry about Pass The Hash attacks, which let the bad guys move laterally around the entire network when systems share the same local administrator password.
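The Group Policy Preferences exposure is easy to audit for yourself. Preference files that carry a stored password do so in a `cpassword` attribute, so a rough check is to walk a copy of SYSVOL and flag any XML file containing that attribute. The sketch below assumes you have read access to the share or a local copy of it; the function name is my own and the scan is a plain text match, not a full XML parse.

```python
import os


def find_gpp_cpassword_files(root):
    """Return sorted paths of XML files under root that contain a cpassword attribute."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.lower().endswith(".xml"):
                continue
            path = os.path.join(dirpath, name)
            try:
                # Read raw bytes so an odd encoding cannot abort the scan.
                with open(path, "rb") as handle:
                    data = handle.read()
            except OSError:
                # Unreadable file (permissions, lock): skip it and keep going.
                continue
            if b"cpassword=" in data.lower():
                hits.append(path)
    return sorted(hits)
```

Calling `find_gpp_cpassword_files(r"\\example.local\SYSVOL")` (a placeholder domain) returns the files to review. Any hit should be treated as a compromised credential: remove the preference item and rotate the password.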

You need to consider vaulting master image related credentials in an enterprise PAM solution such as CyberArk, Centrify, or Thycotic. I see many companies with teams who use various consumer or open source password vaults which is better than a spreadsheet or text file but still not the most ideal scenario for centralized and traceable access and usage of credentials. There’s no accountability if IT does not centralize this.

As an additional layer of

Gateway – Traffic Management and Security

Your gateway into the VDI environment is critical. This is how the remoting protocol is able to communicate securely between the client and the virtual endpoint. You need to consider placement as close to the users as possible. Many companies have multiple gateways. In Citrix environments, for example, these are Citrix Gateways (NetScaler Gateways) as either physical or virtual appliances. These can be load balanced globally with intelligence built-in to direct the user to the closest and best-performing gateway at a given point in time. In VMware environments, you can use Unified Access Gateway (UAG) virtual appliances or Horizon Security Server on Windows.

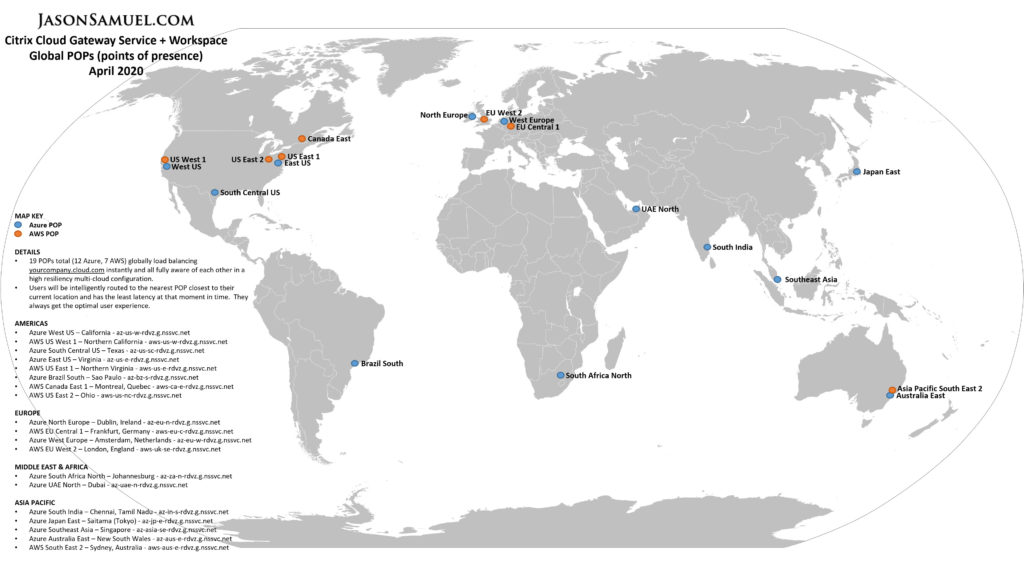

If you choose to use a PaaS (platform-as-a-service) gateway such as the Citrix Gateway Service powered by Azure and AWS in Citrix Cloud or RD Gateway powered by Azure Traffic Manager in Microsoft WVD, you get a lot of benefits worth considering: administration overhead is offloaded to the provider, you get global scale and availability, you no longer have to worry about DR for the gateway since it’s always active, it’s always up to date and patched for less attack surface against vulnerabilities/exploits, it supports both on-prem and cloud-hosted workloads, etc. It’s fairly transparent to you and is just there ready to go as part of the service with zero to minimal initial configuration on your part. The benefits go on and on with this approach, and it should be considered if it’s a good fit for your organization. At this time, the Unified Access Gateway in VMware Horizon Cloud Service is not a PaaS service but I’m sure it will follow suit with an offering at some point.

Example of Citrix Gateway Service global POPs (points of presence) instantly available to you:

Your gateway is the ingress point into your environment. Be cognizant of the latest TLS cipher and protocol recommendations. Use Qualys SSL Labs to periodically check your TLS settings. Right now TLS 1.2 may be the standard but I have been moving some items behind TLS 1.3 since 2017 back during the RFC drafts. My website, for example, has been using TLS 1.3 since mid-2017. TLS 1.3 was finalized in RFC 8446 in August of 2018. Some compliance requires you to be on certain TLS versions so catch these in your internal audits before they become an external audit problem. I foresee a TLS 1.3 requirement coming from governing bodies very soon as more clients finalize their support for it.
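Between full external scans, a quick spot check of what a gateway actually negotiates can be done with Python’s standard `ssl` module. This is a minimal sketch, not a replacement for a proper TLS assessment: it only reports the protocol version agreed with this particular client, and the helper names are my own.

```python
import socket
import ssl

# Protocol names as returned by SSLSocket.version(), oldest to newest.
_TLS_ORDER = ["TLSv1", "TLSv1.1", "TLSv1.2", "TLSv1.3"]


def make_context(minimum=ssl.TLSVersion.TLSv1_2):
    """Build a certificate-verifying client context that refuses older protocols."""
    context = ssl.create_default_context()
    context.minimum_version = minimum
    return context


def negotiated_tls_version(host, port=443, timeout=5.0, context=None):
    """Connect to host:port and return the negotiated protocol, e.g. 'TLSv1.3'."""
    context = context or make_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


def meets_minimum(negotiated, minimum="TLSv1.2"):
    """True when the negotiated protocol is at least the required minimum."""
    if negotiated not in _TLS_ORDER or minimum not in _TLS_ORDER:
        return False
    return _TLS_ORDER.index(negotiated) >= _TLS_ORDER.index(minimum)
```

For example, `meets_minimum(negotiated_tls_version("gateway.example.com"), "TLSv1.3")` tells you whether that gateway (a placeholder hostname) negotiated TLS 1.3 with this client. A handshake failure raises an `ssl.SSLError`, which is itself a useful signal that the gateway and the client share no protocol at or above the minimum.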

One quick fact I’d like to share when thinking about gateway appliances: Citrix, with Citrix ADC (previously known as NetScaler), was the first ADC vendor to officially support TLS 1.3, back in September 2018: https://www.citrix.com/blogs/2018/09/11/citrix-announces-adc-support-for-tls-1-3/. Out of all those “middleboxes” that power the Internet, Citrix ADC was the first to embrace TLS 1.3 in a production capacity. When you think about Internet security and stepping up to the challenge of modern threats, you always want to think about which vendors are riding the cusp of change and choose to invest internal development cycles in meeting these threats. My pet peeve is vendors that rest on their laurels and are reactive to the world. The Citrix ADC engineering team chose not to sit around, and I applaud them for being the first of their peers in the industry to embrace and deliver on TLS 1.3 support. You always want to examine which companies are forward thinking and which are reactive when it comes to critical components like gateways on your perimeter.